Featured Experiments

Explore our latest research experiments, tools, and frameworks aimed at improving AI quality and reliability.

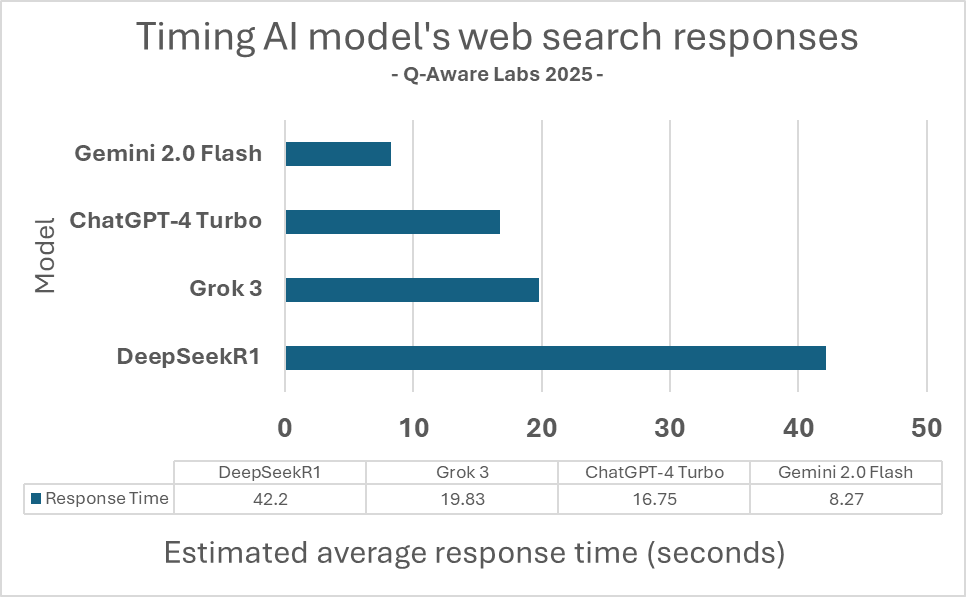

Evaluating Web Search Performance Across Top AI Assistants

Comprehensive insights into how AI assistants search the web and generate responses from what they find.

VeriBot: Intelligent AI Response Testing Automation

VeriBot is a lightweight, configurable framework for automated testing of AI language models.

Automated Edge Case Generator

An intelligent system that analyzes data patterns to automatically generate edge-case test scenarios for machine learning models.